Is FPGA Better Than GPU?

FPGA vs. GPU

1. Understanding the Key Players

Alright, let's dive into the techy world of FPGAs and GPUs. It's a bit like comparing a Swiss Army knife to a specialized chef's knife. Both can cut, but they do it in very different ways and are suited for distinct tasks. The question "Is FPGA better than GPU" is complex and really depends on what you need to accomplish.

A GPU, or Graphics Processing Unit, is essentially a highly parallel processor originally designed to handle the intense calculations required for rendering graphics: think video games, image processing, and anything visually demanding. GPUs excel at performing the same operation on large datasets simultaneously, a concept known as Single Instruction, Multiple Data (SIMD); NVIDIA calls its closely related execution model SIMT (Single Instruction, Multiple Threads). Imagine a massive choir all singing the same note at the same time; that's roughly how a GPU works.

An FPGA, or Field-Programmable Gate Array, is a completely different beast. It's like a blank canvas of hardware that you can configure to perform specific tasks. Instead of running software on a fixed, pre-designed architecture like a GPU, you configure the hardware itself, shaping the circuit to fit your application. This allows for extreme optimization and customization, but it takes considerably more expertise to implement.
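
At the lowest level, much of an FPGA's configurable fabric is built from small lookup tables (LUTs) connected by programmable routing. The Python sketch below is a simplified software model, not vendor code: the hypothetical `LUT4` class stores a 16-entry truth table, so "reprogramming" it to compute a different Boolean function only means loading different configuration bits. Real devices add flip-flops, carry chains, DSP blocks, and routing, and many current parts use 6-input LUTs.

```python
from typing import Callable


class LUT4:
    """Toy model of a 4-input FPGA lookup table (hypothetical, for illustration)."""

    def __init__(self, config: int) -> None:
        # 16 configuration bits: bit k holds the output for input pattern k.
        if not 0 <= config < (1 << 16):
            raise ValueError("a 4-input LUT needs exactly 16 configuration bits")
        self.config = config

    def __call__(self, a: int, b: int, c: int, d: int) -> int:
        # The four inputs form a 4-bit address that selects one stored bit.
        index = (d << 3) | (c << 2) | (b << 1) | a
        return (self.config >> index) & 1


def truth_table(fn: Callable[[int, int, int, int], int]) -> int:
    """Build the 16-bit configuration that makes a LUT4 compute fn."""
    config = 0
    for index in range(16):
        a, b, c, d = index & 1, (index >> 1) & 1, (index >> 2) & 1, (index >> 3) & 1
        config |= (fn(a, b, c, d) & 1) << index
    return config


# The same "hardware" configured for two different functions.
and4 = LUT4(truth_table(lambda a, b, c, d: a & b & c & d))
parity4 = LUT4(truth_table(lambda a, b, c, d: a ^ b ^ c ^ d))

print(and4(1, 1, 1, 1), and4(1, 0, 1, 1))        # 1 0
print(parity4(1, 0, 0, 0), parity4(1, 1, 0, 0))  # 1 0
```

Nothing about the `LUT4` object changes between the two uses except its configuration word, which is the essence of "field-programmable."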

So, where does the 'better' fit in? It's all about the application. Let's break down the strengths and weaknesses of each.

The Strengths & Quirks of Each Contender

2. Diving Deeper into GPU & FPGA Capabilities

GPUs shine in applications where massive parallelism is key. Machine learning training, video processing, and scientific simulations are all areas where GPUs excel. They have a relatively easy-to-use programming model (compared to FPGAs) and are widely supported by software frameworks like TensorFlow and PyTorch. Think of it as having a well-paved highway system designed for high-volume traffic.

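However, GPUs aren't always the best choice. They can be power-hungry, and their performance drops when the workload doesn't map well onto their parallel architecture, for example when neighbouring threads follow different branches or when there is too little data to keep thousands of cores busy. If you need to perform a long chain of complex, strictly sequential operations, a GPU might struggle.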
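
One common reason a GPU struggles is branch divergence. GPUs execute groups of threads in lockstep (NVIDIA calls a group of 32 threads a warp), and when threads in a group take different sides of an `if`, the hardware generally runs each path in turn with the inactive lanes masked off. The Python model below is a deliberate simplification that ignores scheduling details on newer GPUs; the hypothetical `issue_slots` function just counts how many instruction slots the groups spend, given each thread's branch and each path's length.

```python
from typing import Dict, List


def issue_slots(branches: List[str], path_cost: Dict[str, int], width: int = 32) -> int:
    """Toy cost model of SIMD/SIMT branch divergence (a simplification).

    branches[i] is the branch taken by thread i; path_cost maps each branch
    name to the number of instructions on that path. Threads run in lockstep
    groups of `width`; a group pays for every distinct path any of its threads
    takes, because those paths execute one after another with lanes masked.
    """
    total = 0
    for start in range(0, len(branches), width):
        group = branches[start:start + width]
        total += sum(path_cost[b] for b in set(group))
    return total


costs = {"then": 10, "else": 10}
uniform = ["then"] * 64                      # every thread agrees
alternating = ["then", "else"] * 32          # neighbours disagree
sorted_work = ["then"] * 32 + ["else"] * 32  # same work, grouped by branch

print(issue_slots(uniform, costs))      # 20: 2 groups x 10
print(issue_slots(alternating, costs))  # 40: 2 groups x (10 + 10)
print(issue_slots(sorted_work, costs))  # 20: each group runs a single path
```

The alternating and sorted inputs contain exactly the same work, yet in this model the alternating layout costs twice as much; reordering data so that threads in a group follow the same path is a common mitigation.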

FPGAs, on the other hand, offer a high degree of flexibility and, for specific applications, excellent performance. Because you're designing the hardware architecture, you can optimize for speed, power efficiency, and latency. This makes them ideal for applications like high-frequency trading, network packet processing, and embedded systems where real-time performance is critical. It's like building a custom race car specifically for a particular track.
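
Much of that control over speed and latency comes from pipelining: the designer splits a computation into stages separated by registers, so a new input can enter every clock cycle while earlier inputs are still in flight. The Python model below is a cycle-by-cycle sketch, not hardware description code, and its three stages (multiply, add, cap at 255) are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Stage = Callable[[int], int]


def run_pipeline(stages: List[Stage], inputs: List[int]) -> Tuple[List[int], int]:
    """Clock a feed-forward pipeline until every input has come out.

    Returns (outputs, cycles). Each stage's result is latched into a register
    at the clock edge, so the next stage sees it one cycle later.
    """
    regs: List[Optional[int]] = [None] * len(stages)  # pipeline registers
    pending = list(inputs)
    outputs: List[int] = []
    cycles = 0
    while pending or any(r is not None for r in regs):
        cycles += 1
        new_regs: List[Optional[int]] = [None] * len(stages)
        # Stage 0 accepts a fresh input every cycle while any remain.
        if pending:
            new_regs[0] = stages[0](pending.pop(0))
        # Every later stage works on what the previous stage latched last cycle.
        for k in range(1, len(stages)):
            if regs[k - 1] is not None:
                new_regs[k] = stages[k](regs[k - 1])
        regs = new_regs  # clock edge: all registers update at once
        if regs[-1] is not None:
            outputs.append(regs[-1])  # the result leaves the pipeline
            regs[-1] = None
    return outputs, cycles


# Hypothetical three-stage datapath: multiply, add, then cap the value at 255.
stages: List[Stage] = [lambda x: x * 3, lambda x: x + 1, lambda x: min(x, 255)]
outputs, cycles = run_pipeline(stages, [1, 2, 3, 100])
print(outputs, cycles)  # [4, 7, 10, 255] 6
```

With S stages and N inputs this model finishes in N + S - 1 cycles: the first result appears after S cycles (the latency), and after that one result arrives every cycle (the throughput).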

The trade-off with FPGAs is complexity. Programming them traditionally requires specialized knowledge of hardware description languages (HDLs) like VHDL or Verilog; high-level synthesis (HLS) tools that accept C or C++ can help, but they still demand hardware-aware design. The development process can be significantly longer and more challenging than with a GPU. Plus, the initial cost of FPGA development boards can be higher.

When Does FPGA Take the Crown?

3. Identifying the Ideal Use Cases

Let's consider some specific scenarios. Imagine you're building a system for high-frequency trading. Latency is everything. Every microsecond counts. In this case, an FPGA is likely the better choice. You can design the hardware to process market data and execute trades with minimal delay.
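
For a fixed-function FPGA pipeline, the latency of the logic itself is straightforward to reason about: it is the number of clock cycles through the pipeline divided by the clock frequency, and, absent stalls, it is the same for every message. The Python helper below does that arithmetic; the cycle count and clock rates are hypothetical placeholders, not figures for any real trading system or device, and the result excludes time spent in network interfaces.

```python
def pipeline_latency_ns(cycles: int, clock_mhz: float) -> float:
    """Latency in nanoseconds of logic that takes `cycles` clock cycles."""
    period_ns = 1_000.0 / clock_mhz  # one clock period in ns (1 MHz -> 1000 ns)
    return cycles * period_ns


# Hypothetical example: a 20-stage parse-and-decide pipeline.
for mhz in (250.0, 400.0):
    print(f"{mhz:.0f} MHz: {pipeline_latency_ns(20, mhz):.1f} ns")
# 250 MHz: 80.0 ns
# 400 MHz: 50.0 ns
```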

Or, perhaps you're developing a custom image processing algorithm for a medical device. You need to achieve high accuracy and low power consumption. Again, an FPGA could provide the optimal solution. You can tailor the hardware to the specific algorithm and minimize power usage.

However, if you're training a large neural network, a GPU is probably the way to go. The massive parallelism of a GPU is well-suited for the matrix multiplications involved in deep learning. The availability of mature software libraries and frameworks also makes GPU development much easier in this domain.
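
Matrix multiplication maps so well to a GPU because every element of the output, C[i][j] = sum over k of A[i][k] * B[k][j], can be computed independently of the others. The pure-Python sketch below makes that explicit by filling the output in a random order and still getting the same answer; on a GPU, those independent dot products (or tiles of them) can be spread across many threads. It illustrates the data parallelism only and is not how GPU libraries such as cuBLAS actually schedule the work.

```python
import random
from typing import List

Matrix = List[List[float]]


def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """One output element: the dot product of row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a: Matrix, b: Matrix, seed: int = 0) -> Matrix:
    """Fill C = A x B, visiting output elements in a shuffled order.

    Because no element depends on another, the visiting order (or a parallel
    schedule) does not change the result.
    """
    rows, cols = len(a), len(b[0])
    c = [[0.0] * cols for _ in range(rows)]
    coords = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(coords)
    for i, j in coords:
        c[i][j] = output_element(a, b, i, j)
    return c


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(matmul_any_order(a, b, seed=1))  # [[19.0, 22.0], [43.0, 50.0]]
```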

Basically, when you require ultra-low latency, deterministic behavior, and extreme customization, the answer to "Is FPGA better than GPU?" is often "yes." But when you prioritize ease of development, general-purpose parallelism, and broad software support, the GPU is usually the winner.

The Future of Processing

4. Exploring the Best of Both Worlds

The future of high-performance computing may well involve a hybrid approach that combines the strengths of both FPGAs and GPUs. Imagine a system where a GPU handles the bulk of the processing, while an FPGA accelerates specific, performance-critical tasks.

Some companies are already exploring this approach. For example, some cloud providers offer both GPU and FPGA instances, allowing users to choose the best processing technology for each part of their workload. That gives teams room to optimize each stage without committing all of their hardware to a single architecture.

As AI and machine learning continue to evolve, we're likely to see even more innovative ways to leverage both FPGAs and GPUs. The key will be understanding the strengths and weaknesses of each technology and applying them strategically.

Ultimately, the choice between an FPGA and a GPU depends entirely on the specific requirements of your application. There's no one-size-fits-all answer. Carefully consider your performance needs, power constraints, development time, and budget before making a decision. Don't be afraid to experiment and see what works best for you!

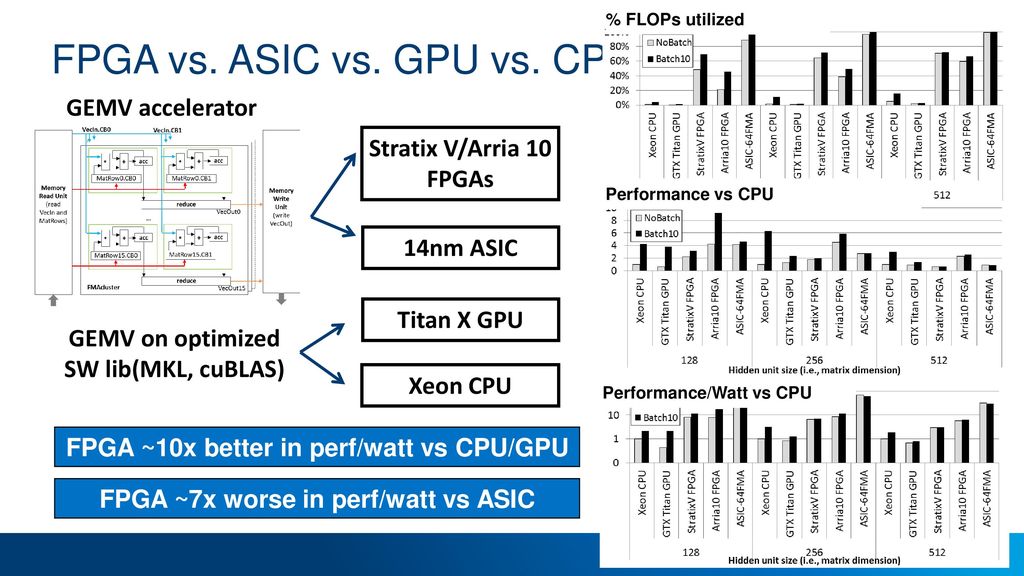

Making the Choice

5. A Practical Guide to Decision-Making

Before you commit to either FPGA or GPU, ask yourself these crucial questions: What are your performance targets? How much latency can you tolerate? What is your power budget? How quickly do you need to develop and deploy your solution? What are your budget constraints?

If your primary concern is maximizing performance for a specific task, and you're willing to invest the time and effort to develop a custom hardware solution, an FPGA might be the right choice. However, if you need a more general-purpose solution that's easy to program and deploy, a GPU might be a better fit.
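
The rules of thumb in this section and the previous one can be written down as a rough checklist. The Python function below is only a toy encoding of this article's heuristics; the field names and the rule ordering are assumptions made for illustration, not an established selection method, and a real decision should rest on benchmarks of your own workload.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical summary of a project's needs (field names are illustrative)."""
    ultra_low_latency: bool
    deterministic_timing: bool
    custom_datapath: bool  # e.g. unusual bit widths or custom I/O handling
    can_invest_in_hardware_design: bool
    large_dl_training: bool


def suggest_platform(req: Requirements) -> str:
    """Return "GPU" or "FPGA" following this article's rules of thumb."""
    if req.large_dl_training:
        # Massive parallelism plus mature frameworks favour GPUs here.
        return "GPU"
    wants_fpga_strengths = (
        req.ultra_low_latency or req.deterministic_timing or req.custom_datapath
    )
    if wants_fpga_strengths and req.can_invest_in_hardware_design:
        return "FPGA"
    # Otherwise default to ease of development and broad software support.
    return "GPU"


trading = Requirements(True, True, True, True, False)
training = Requirements(False, False, False, True, True)
print(suggest_platform(trading), suggest_platform(training))  # FPGA GPU
```

Note that wanting FPGA strengths is not enough on its own in this sketch: without the time and expertise to build custom hardware, it still falls back to the GPU.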

Also, consider the available tools and libraries. GPUs have a mature ecosystem of software tools and libraries that make development much easier. FPGAs, on the other hand, require specialized knowledge and tools.

Finally, don't overlook the importance of cost. FPGAs can be more expensive than GPUs, especially if you factor in the cost of development tools and expertise. However, in some cases, the performance benefits of an FPGA can justify the higher cost.
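
One way to check whether an FPGA's benefits justify its higher cost is a simple break-even calculation: add the one-time engineering cost to the per-unit hardware and energy costs for each option, then find the deployment size at which the totals cross. The Python sketch below does that arithmetic; every number in the example is a made-up placeholder, not a real price, and the model ignores factors such as maintenance and time to market.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    """Illustrative cost model; every field is a hypothetical input."""
    engineering: float    # one-time development cost (tools, expertise, time)
    unit_hardware: float  # purchase cost per deployed unit
    unit_energy: float    # lifetime energy cost per deployed unit

    def total(self, units: int) -> float:
        return self.engineering + units * (self.unit_hardware + self.unit_energy)


def break_even_units(fpga: CostModel, gpu: CostModel) -> Optional[int]:
    """Smallest deployment size at which the FPGA option costs no more than the GPU one.

    Returns None when the FPGA never catches up (its per-unit cost is not lower).
    """
    extra_upfront = fpga.engineering - gpu.engineering
    if extra_upfront <= 0:
        return 0  # the FPGA is already no more expensive with zero units
    per_unit_saving = (gpu.unit_hardware + gpu.unit_energy) - (
        fpga.unit_hardware + fpga.unit_energy
    )
    if per_unit_saving <= 0:
        return None
    return math.ceil(extra_upfront / per_unit_saving)


# Placeholder figures, chosen only to make the arithmetic easy to follow.
fpga = CostModel(engineering=150_000, unit_hardware=4_000, unit_energy=1_000)
gpu = CostModel(engineering=30_000, unit_hardware=3_000, unit_energy=4_000)
print(break_even_units(fpga, gpu))  # 60
```

Here the FPGA option costs 120,000 more up front but saves 2,000 per unit, so it breaks even at 60 units; with different inputs it may never break even at all.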

FAQ

6. Your Burning Questions Answered

Q: Is an FPGA always faster than a GPU?
A: Not necessarily. An FPGA can be faster for highly specialized tasks where you can optimize the hardware architecture. However, for general-purpose parallel processing, a GPU is often faster and easier to program.

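Q: Is it harder to program an FPGA than a GPU?
A: Yes, generally. Programming an FPGA traditionally requires knowledge of hardware description languages like VHDL or Verilog, while GPUs can be programmed using higher-level languages and frameworks like CUDA or OpenCL. Some FPGA toolchains also accept C/C++ or OpenCL through high-level synthesis, which narrows the gap, but FPGA development still calls for hardware-aware design.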

Q: Are FPGAs more power-efficient than GPUs?
A: Potentially. For specific, optimized applications, an FPGA can be more power-efficient than a GPU. However, it depends heavily on the application and the design.
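
"Power-efficient" usually means work done per unit of energy, for example inferences per joule: throughput divided by average power draw, measured on the same workload. The small Python helper below shows that comparison; the throughput and power figures are invented placeholders, not measurements of any real FPGA or GPU.

```python
def work_per_joule(items_per_second: float, watts: float) -> float:
    """Energy efficiency: items processed per joule (1 W = 1 J/s)."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return items_per_second / watts


# Placeholder numbers for illustration only.
designs = {
    "gpu_design": work_per_joule(items_per_second=20_000, watts=250),
    "fpga_design": work_per_joule(items_per_second=6_000, watts=50),
}
for name, eff in designs.items():
    print(f"{name}: {eff:.0f} items/J")
# gpu_design: 80 items/J
# fpga_design: 120 items/J
```

In this made-up example the slower design is the more efficient one, which is why "faster" and "more power-efficient" are separate questions.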

Q: Can I use both an FPGA and a GPU in the same system?
A: Absolutely! Hybrid systems that combine FPGAs and GPUs are becoming increasingly common, allowing you to leverage the strengths of both technologies.